GSoC Week 12: Stay up to date

We have only a little over one week left. Lots of things were learnt and a bunch of mistakes were made, but I think I’m learning, which is awesome! This week there are only two goals. The first, to be honest, involved a lot of thinking, but I believe I was able to solve the problem. I still need to do some more testing and implement it for another Jupyter notebook (pull_requests), but I’ll get into that later on. I started the second goal this week but got caught up with the first; because the second involves a different technology, I have to see whether it actually helps me pull the emails from the LKML (Linux Kernel Mailing List).

So this week’s task with my mentor Sean Goggins is as follows:

Goals for the week:

- Make the pipermail/github/perceval architecture pull only from the last time a pull took place. So, if I pull on August 3, 2018 at 2:22pm UTC, I would then check that last successful run time and only pull communication records from after that time.

- Investigate non-Perceval mechanisms for getting HTML stored email lists.

Extra:

3) Upload all the pull-requests.

Goals

Goal 1:

We begin with the first goal. As per usual, I hadn’t thought ahead: I started off with the initial goal of just pulling the github-issues and pull-requests and uploading them to the SQL database. The problem is that whenever you want to update the database, you have to download all the github-issues and pull-requests again and re-upload them.

Github-issues

Comments:

We first check whether the table “github_issues_2” has been created (line 10); I named it this because I’m doing some testing. This table stores all the comments from all the issues in the GitHub repositories. If it’s in the database, we count the number of rows it has, because later on we will want to add more messages: the column “augurmsgID” for the next message is set to the row count plus one and is then incremented for each new message. If the table isn’t in the database, a flag records this (line 12).

    table_names = s.inspect(db).get_table_names()
    print(table_names)
    val = False
    #mail_lists = True
    git_repos = True   # flipped to False below if the comments table is missing
    numb = 0
    item = 1           # augurmsgID to give the next message
    columns2 = "augurlistID","backend_name","github_repo_url","project","last_run"
    df_mail_list = pd.DataFrame(columns=columns2)
    if("github_issues_2" not in table_names):
        #mail_lists = False
        git_repos = False
    else:
        SQL = s.sql.text("""SELECT COUNT(*) FROM github_issues_2""")
        df7 = pd.read_sql(SQL, db)
        augurmsgID = int(df7.iloc[0, 0])+1   # row count + 1
        item = augurmsgID
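To make the counting idea concrete, here is a minimal sketch of the same check. It assumes an in-memory SQLite database from the standard library rather than the database used in the notebook, and `next_message_id` is a hypothetical helper name, not something from the notebook.

```python
import sqlite3


def next_message_id(conn, table="github_issues_2"):
    """Return the ID for the next message: row count + 1, or 1 if the table is missing."""
    exists = conn.execute(
        "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
    ).fetchone()
    if exists is None:
        return 1  # nothing uploaded yet, so numbering starts at 1
    # Table names cannot be bound as parameters; only pass trusted names here.
    (count,) = conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()
    return count + 1


conn = sqlite3.connect(":memory:")
first_id = next_message_id(conn)  # table does not exist yet
conn.execute("CREATE TABLE github_issues_2 (augurmsgID INTEGER, body TEXT)")
conn.executemany(
    "INSERT INTO github_issues_2 VALUES (?, ?)", [(1, "first"), (2, "second")]
)
later_id = next_message_id(conn)  # two rows are stored now
print(first_id, later_id)
```

Counting rows only works as long as rows are never deleted; selecting the highest existing “augurmsgID” instead would be a sturdier variant of the same idea.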

Git-repositories:

Next we determine whether the git repositories we’re downloading messages for have already been uploaded to the database. If they have, we look up the highest “augurlistID” currently in the table, so that any new git repository can be added as a new row. The column “augurlistID” for a new row continues from the number of rows already stored, and it keeps increasing by 1 for every further row that is added.

    if("github_issues_repo_jobs" in table_names):
        # Projects that already have a row in the jobs table
        lists_createdSQL = s.sql.text("""SELECT project FROM github_issues_repo_jobs""")
        df1 = pd.read_sql(lists_createdSQL, db)
        print(df1)
        val = True
        # Highest augurlistID stored so far
        val = db.engine.execute("""SELECT augurlistID FROM
                                github_issues_repo_jobs
                                ORDER BY augurlistID DESC LIMIT 1""")
        for i in val:
            numb = i['augurlistID']

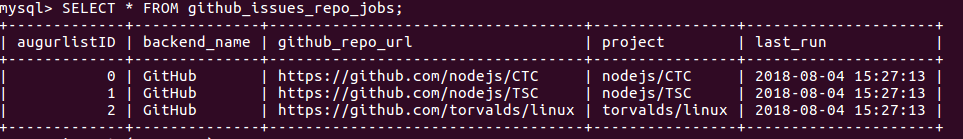

Example of how the table looks:

However, if we don’t yet have a table of the git repositories we’re going to download, we create that table and make the column “augurlistID” its primary key.

    else:
        # Create the (still empty) jobs table with explicit column types
        df_mail_list.to_sql(name="github_issues_repo_jobs",con=db,if_exists='replace',index=False,
                            dtype={'augurlistID': s.types.Integer(),
                                   'backend_name': s.types.VARCHAR(length=300),
                                   'github_repo_url': s.types.VARCHAR(length=300),
                                   'project': s.types.VARCHAR(length=300),
                                   'last_run': s.types.DateTime()
                                   })
        lists_createdSQL = s.sql.text("""SELECT project FROM github_issues_repo_jobs""")
        df1 = pd.read_sql(lists_createdSQL, db)
        # to_sql does not set a primary key, so add it afterwards
        db.execute("ALTER TABLE github_issues_repo_jobs ADD PRIMARY KEY (augurlistID)")
        print("Created Table")
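As a rough illustration of the jobs table and of how the next “augurlistID” can be derived from it, here is a small sketch. It assumes SQLite from the standard library instead of the database used in the notebook, so the primary key is declared when the table is created; `next_list_id` is a hypothetical helper, and starting the numbering at 1 is my choice for the sketch.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite cannot add a primary key with ALTER TABLE, so it is declared up front.
conn.execute(
    """
    CREATE TABLE IF NOT EXISTS github_issues_repo_jobs (
        augurlistID INTEGER PRIMARY KEY,
        backend_name TEXT,
        github_repo_url TEXT,
        project TEXT,
        last_run TEXT
    )
    """
)


def next_list_id(conn):
    """Return the highest stored augurlistID plus one, or 1 for an empty table."""
    (highest,) = conn.execute(
        "SELECT MAX(augurlistID) FROM github_issues_repo_jobs"
    ).fetchone()
    return 1 if highest is None else highest + 1


empty_id = next_list_id(conn)  # no repositories registered yet
conn.execute(
    "INSERT INTO github_issues_repo_jobs VALUES (?, ?, ?, ?, ?)",
    (empty_id, "GitHub", "https://github.com/kmn5409/GSoC", "kmn5409/GSoC",
     "2018-08-03 14:22:00"),
)
following_id = next_list_id(conn)  # one repository registered
print(empty_id, following_id)
```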

After checking whether the table of git repositories has been created, we pull that table and store it in “res”. This lets us change rows in the table and then commit the change, so that the table in the database is changed as well.

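    Base = declarative_base(db)

    # Map the existing jobs table onto a class; the columns are read from the database
    class table(Base):
        __tablename__ = 'github_issues_repo_jobs'
        __table_args__={'autoload':True}

    Session = sessionmaker(bind=db)
    session = Session()
    # One object per row; editing an object and committing updates the table
    res = session.query(table).all()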

You may not have realized it, but this structure is very similar to my Jupyter notebook PiperMail; the only difference is that we’re retrieving github-issues instead of emails from pipermail.

Updating

We first store the time at which we ran this Jupyter notebook, github-issues_3. Then we determine whether each git repository we want to download is already stored in that table. For example, if we want to download issues from “kmn5409/GSoC” and see that it’s not in the table, we create a dataframe that stores all the git repositories we want to add to the table. This also works if it’s the first time we’re downloading issues for any repository: the dataframe will simply hold all the repositories.

    today = strftime("%a, %d %b %Y %H:%M:%S +0000", gmtime())   # time of this run, in UTC
    for y in repo_url:
        repo = GitHub(owner=own,repository=y,api_token=token)
        inside = own + '/' + y
        # Repository not registered yet: queue a new row for the jobs table
        if(inside not in df1['project'].values):
            new = True
            li = [[numb,"GitHub",'https://github.com/' + inside, inside,Piper.convert_date(today)]]
            df8 = pd.DataFrame(li,columns=columns2)
            df4 = df_mail_list.append(df8)
            df_mail_list = df4
            numb+=1
            froms = None   # first run: download everything
            #continue

However, if we’re adding new messages for repositories we have downloaded before, we read the last time we ran this notebook and update it to the time of the current run of github-issues_3. “froms” holds the last time we ran the program: if it’s the first time, it is “None”; otherwise it is the last run time as a datetime object.

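        # Continues the "if" above, inside the loop over repositories
        else:
            j = 0
            #print(today)
            time = Piper.convert_date(today)
            # Find this repository's row in the jobs table
            while(res[j].project!=inside):
                #print(res[j].project)
                j+=1
            now = res[j].last_run      # previous run time
            print(now)
            res[j].last_run = time     # record the current run
            session.commit()
            froms = now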
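The read-then-update step can also be sketched as a single lookup by project name. This is only an illustration under a few assumptions: it uses SQLite from the standard library rather than the SQLAlchemy session above, it stores “last_run” as text, and `swap_last_run` is a hypothetical helper name.

```python
import sqlite3
from datetime import datetime


def swap_last_run(conn, project, now):
    """Store `now` as the project's last_run and return the previous value (or None)."""
    row = conn.execute(
        "SELECT last_run FROM github_issues_repo_jobs WHERE project = ?", (project,)
    ).fetchone()
    if row is None:
        return None  # unknown project: treat it as a first run
    conn.execute(
        "UPDATE github_issues_repo_jobs SET last_run = ? WHERE project = ?",
        (now.isoformat(sep=" "), project),
    )
    conn.commit()
    return datetime.fromisoformat(row[0])


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE github_issues_repo_jobs (project TEXT, last_run TEXT)")
conn.execute(
    "INSERT INTO github_issues_repo_jobs VALUES (?, ?)",
    ("kmn5409/GSoC", "2018-08-03 14:22:00"),
)
froms = swap_last_run(conn, "kmn5409/GSoC", datetime(2018, 8, 10, 9, 0, 0))
print(froms)
```

One thing worth considering with either version: the new run time is saved before the download starts, so if the download fails partway, the next run would skip the records it missed. Saving the time only after a successful download would match the "last successful run" wording of the goal more closely.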

I’ve begun to cut some parts of my code to make it easier to understand what new things I’m adding, so don’t be too surprised when you open the jupyter notebook and it has a lot more code in there.

We then download the issues or pull-requests created after “froms”. Here we do the usual check of whether an item is a pull-request or an issue. If it’s an issue, we take the time the issue was created and convert it to a datetime object. If “froms” is “None”, we append this user along with the comment and issue information. Otherwise, we append the user only if the issue was created after the last time we ran the program.

    for issues in repo.fetch(from_date = froms):
        if 'pull_request' in issues['data']:
            #print(issues['data']['number'])
            continue   # pull requests are handled in another notebook
        else:
            created = issues['data']['created_at']
            #print(type(froms))
            # Turn "YYYY-MM-DDTHH:MM:SSZ" into "YYYY-MM-DD HH:MM:SS" before parsing
            issue_time = created[:10] + " " + created[11:19]
            issue_time = dt.strptime(issue_time,"%Y-%m-%d %H:%M:%S")
            if(froms == None):
                df = df.append(df_user)
                item+=1
            elif(issue_time > froms):
                df = df.append(df_user)
                item+=1

Finally we go through the comments of the issues and we do the same thing we did above.

    # Same check as for the issue itself, applied to each comment
    for i in range(len(issues['data']['comments_data'])):
        created = issues['data']['comments_data'][i]\
                    ['created_at']
        issue_time = created[:10] + " " + created[11:19]
        issue_time = dt.strptime(issue_time,"%Y-%m-%d %H:%M:%S")
        if(froms == None):
            df = df.append(df_user)
            item+=1
        elif(issue_time > froms):
            df = df.append(df_user)
            item+=1
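Since the issue loop and the comment loop apply the same test, it could be pulled into one small function. The sketch below is a possible refactoring, not what the notebook does: `parse_github_time` and `is_new` are hypothetical names, and it assumes timestamps in the `YYYY-MM-DDTHH:MM:SSZ` form that the slicing above expects.

```python
from datetime import datetime


def parse_github_time(stamp):
    """Parse a timestamp such as '2018-08-03T14:22:00Z' into a naive UTC datetime."""
    return datetime.strptime(stamp, "%Y-%m-%dT%H:%M:%SZ")


def is_new(created_at, froms):
    """Keep a record on the first run (froms is None) or if it was created after froms."""
    return froms is None or parse_github_time(created_at) > froms


last_run = datetime(2018, 8, 3, 14, 22, 0)
print(is_new("2018-08-01T08:00:00Z", None))      # first run keeps everything
print(is_new("2018-08-01T08:00:00Z", last_run))  # created before the last run
print(is_new("2018-08-05T08:00:00Z", last_run))  # created after the last run
```

Both loops would then reduce to `if is_new(created, froms):` followed by the append.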

One problem I still have to fix is that I get kicked off while downloading the pull requests because my GitHub rate limit is exceeded, but this was already solved in github_pull_requests. I will just have to paste that code over. As usual, though, it means waiting hours for the run to finish, because I have to wait for the rate limit on my GitHub API key to reset.

Goal 2:

As with last week, I need some time to look at alternative ways of downloading the LKML (Linux Kernel Mailing List). I have a piece of software that has some potential, but I still need more time to focus and try using it. In general, however, it seems most software that pulls mailing lists expects them to be in MBox format and not Mailman format (HTML emails).

Goal 3:

This is a solution to last week’s problem, whereby the GitHub rate limit would be exceeded and the program would stop. The code uploads to the database whenever more than 500 rows have accumulated, and if the rate limit is about to be exceeded the program sleeps. The problem is that it sleeps for around 1 hour.

    for y in repo_url:
        # sleep_for_rate makes the backend wait for the rate limit to reset instead of stopping
        repo = GitHub(owner=own,repository=y,api_token=token,\
                      sleep_for_rate=True,min_rate_to_sleep=500)
        for issues in repo.fetch():
            # Upload in chunks once more than 500 rows have accumulated
            if(df.shape[0] > 500):
                db = s.create_engine(DB_STR)
                df.to_sql(name='github_pull_requests', con=db,\
                          if_exists='append',index=False)
                df = pd.DataFrame(columns=columns1)
                print("Broken")
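The chunked upload can be pictured with a small generator that groups records into batches. This is a sketch under assumptions: plain Python lists stand in for the DataFrame, `batches` is a hypothetical helper, and each yielded batch is where the `to_sql` call would go.

```python
def batches(records, size=500):
    """Yield lists of at most `size` records, including the final partial batch."""
    batch = []
    for record in records:
        batch.append(record)
        if len(batch) >= size:
            yield batch
            batch = []
    if batch:
        yield batch  # leftover rows that never reached the threshold


sizes = [len(batch) for batch in batches(range(1200), size=500)]
print(sizes)
```

The last `yield` matters: with a threshold check alone, any rows left over when the download ends still need one final upload after the loop.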

I also encountered another problem whereby the program would be disconnected from the database, so I reconnect before each upload.

    if(df.shape[0] > 500):
        db = s.create_engine(DB_STR)
        df.to_sql(name='github_pull_requests', con=db,\
                  if_exists='append',index=False)
        df = pd.DataFrame(columns=columns1)
        print("Broken")

Resources:

GSoC ideas (Specifically Ideas 2 & 3): Ideas

My proposal: My proposal

Files Used:

Python File - PiperReader 16

Jupyter Notebook - PiperMail 12

Jupyter Notebook - Sentiment_Piper 8

Jupyter Notebook - github-issues 6

Jupyter Notebook - github_issues_scores 7

Jupyter Notebook - github_pull_requests 1

Jupyter Notebook - github_pull_requests_scores 1